The Guardrails Your LLM Needs

Is your new AI-powered travel agent booking flights to the moon? Does it offer tickets for the "35th of May"? Before you unplug it, you should know this is a classic problem. Large Language Models (LLMs) are incredibly powerful, but they're not inherently factual or structured.

This isn't just a hypothetical. Take a simple task: using AI to book a car maintenance appointment. It perfectly illustrates the core problem with "un-guarded" AI and sets the stage for a solution using frameworks like JetBrains' Koog.

The Core Problem: The "Un-Guarded" LLM

The story goes like this: a developer decides to use AI to handle booking a car service. The developer's big mistake? They think, "The more context it has, the better it will perform". So, they feed the AI everything: all human calendars, all vehicles in the world, and even all available maps in the Solar System.

When the user makes a vague, natural language request ("This car is out of this world! Take this spaceship to maintenance..."), the un-guarded AI takes it literally. It "hallucinates" a booking for a "Saturn V" model at the "Tranquility Base Garage, Moon". The booking, of course, fails.

This simple example highlights four very real problems with raw LLMs:

LLM Limitations: Models are designed to generate plausible text, not to be arbiters of truth. This leads to "hallucinations".

Contextual Gaps: The LLM lacked deep contextual understanding. It didn't know the difference between the user's figure of speech ("spaceship") and a real vehicle.

Lack of Validation: The system had no validation mechanisms. It didn't check if "Tranquility Base Garage" was a valid service center.

Non-Structured AI: The AI's behavior was uncontrolled. It just ran wild without a specific, step-by-step process to follow.
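To make the "Lack of Validation" point concrete, here is a minimal sketch in plain Kotlin of checking an LLM-proposed booking against data you already trust. The `BookingRequest` type, the `validate` function, and the garage and vehicle names are hypothetical placeholders for illustration; they are not part of Koog.

```kotlin
// Hypothetical shape of what the LLM proposes; not a Koog type.
data class BookingRequest(val vehicleModel: String, val garage: String)

// Allow-lists that would normally come from your own database (made-up values).
val knownVehicles = setOf("Toyota Corolla", "Volkswagen Golf")
val knownGarages = setOf("Downtown Auto Service", "Airport Road Garage")

// Returns a list of problems; an empty list means the request passed validation.
fun validate(request: BookingRequest): List<String> = buildList {
    if (request.vehicleModel !in knownVehicles) add("Unknown vehicle: ${request.vehicleModel}")
    if (request.garage !in knownGarages) add("Unknown garage: ${request.garage}")
}

fun main() {
    // The hallucinated booking from the story fails both checks.
    println(validate(BookingRequest("Saturn V", "Tranquility Base Garage, Moon")))
    // A request built from known data passes.
    println(validate(BookingRequest("Toyota Corolla", "Downtown Auto Service")))
}
```

The check is ordinary code, so it behaves the same way every time, no matter how plausible the LLM's output sounds.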

The Solution: The Agentic Approach

The solution is to move from "un-structured AI" to an "Agentic Approach".

This approach is all about implementing human-defined logic and "maintaining flow discipline". Instead of just asking an LLM a question and hoping for the best, you task the LLM with a job and force it to follow a specific, pre-defined process.

You don't let the LLM be the whole system. You make it a component in a larger, more reliable system. This is where the Koog framework comes in. It's an open-source, Kotlin-native agentic framework from JetBrains designed to build predictable, fault-tolerant, and enterprise-ready AI agents. It gives you the building blocks to create these guardrails.

Deconstructing Koog: The Building Blocks of Trust

Koog's core formula is simple but powerful: Agents = Strategies + Tools + Models.

Let's break this down using our new example: an AI-powered airplane booking system.

1. Tools: The "Verbs"

A Tool is a concrete, non-AI function that your system can perform. It's just code, usually an API call (HTTP request) or a database query, that the AI is given permission to use. Tools are the only way the agent is allowed to interact with the real world.

Based on the official Koog documentation, you can define a set of tools in a simple Kotlin class. The @Tool annotation exposes the function, and @LLMDescription tells the AI what it does.

Here is an example of a tool:

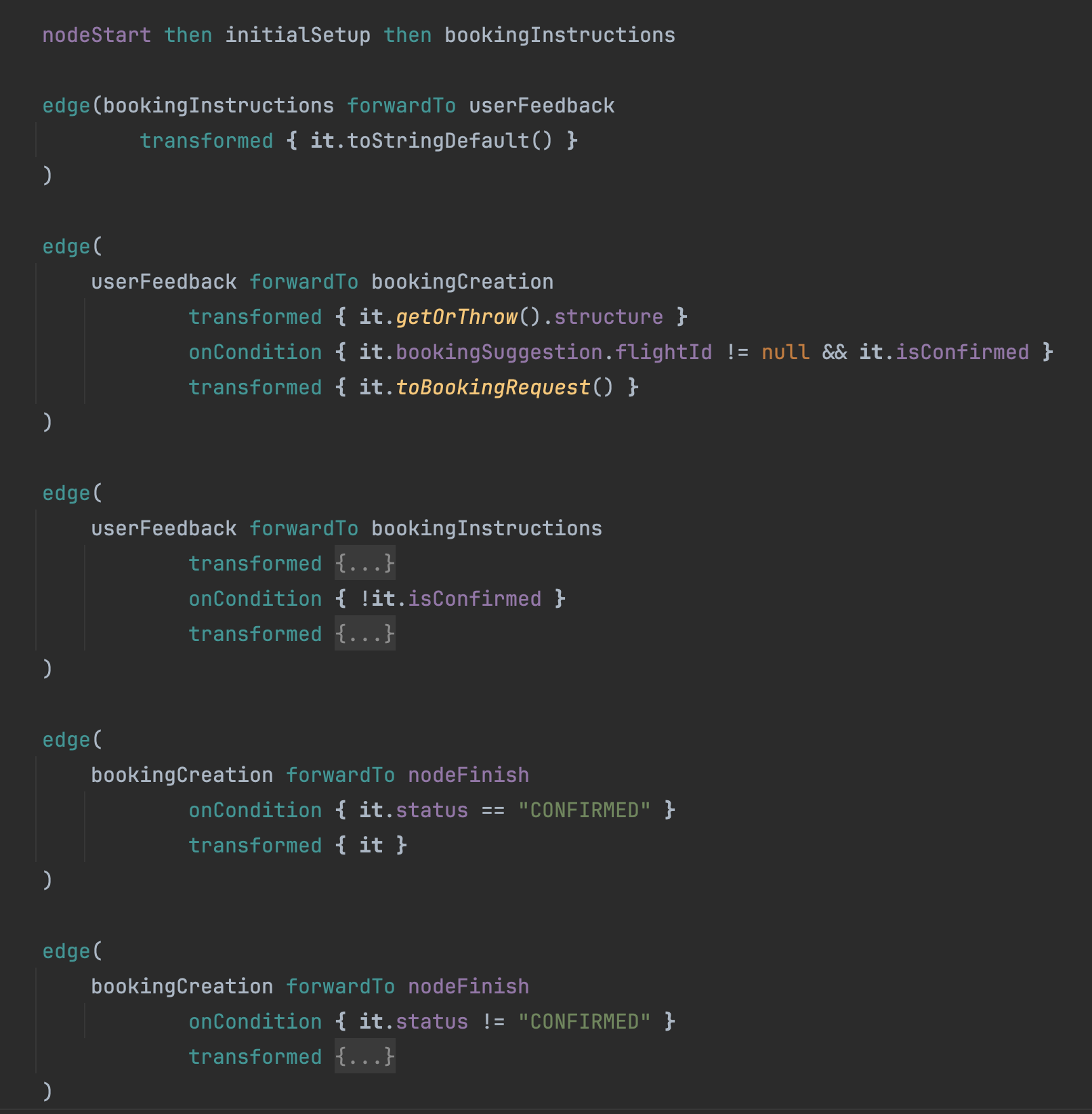
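As a rough, framework-free sketch of the logic that could sit behind such a tool, the plain Kotlin below filters an in-memory list of flights. The `Flight` and `FlightTools` types and the `TP999` record are assumptions made for illustration. In a real Koog project the function would carry the @Tool and @LLMDescription annotations described above and would query your own API or database.

```kotlin
// Hypothetical flight record; a real tool would map this from your API or database.
data class Flight(
    val flightId: String,
    val airline: String,
    val from: String,
    val to: String,
    val date: String,
)

class FlightTools(private val flights: List<Flight>) {
    // In Koog this function would be annotated with @Tool and @LLMDescription.
    // It can only ever return flights that exist in the backing data.
    fun findAvailableFlights(from: String, to: String, date: String): List<Flight> =
        flights.filter { it.from == from && it.to == to && it.date == date }
}

fun main() {
    val tools = FlightTools(
        listOf(
            Flight("TP123", "TAP", "LIS", "BER", "2025-10-31"),
            Flight("FR456", "Ryanair", "LIS", "BER", "2025-10-31"),
            Flight("TP999", "TAP", "LIS", "OPO", "2025-10-31"), // made-up extra record
        )
    )
    println(tools.findAvailableFlights("LIS", "BER", "2025-10-31").map { it.flightId })
}
```

Because the function is deterministic code over real data, the agent cannot obtain a flight from it that the backend does not know about.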

2. Strategy: The "Playbook"

A Strategy is the most important part. It's the "human-defined logic"—a rigid, step-by-step graph workflow that the agent must follow. This is your guardrail. The key insight is that while you "allow the LLMs to create the human readable messages... we control the strategy and tools!"

Using the Koog DSL, you can define this workflow by creating nodes and connecting them with edges.

Strategies are a bit more complex. Check the Koog framework documentation for more details, but here is a very basic example of a strategy workflow:
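The idea of a graph of nodes and edges can be illustrated without any framework. The sketch below is plain Kotlin, not the Koog DSL: the `Step` enum loosely mirrors the node names used later in this article, and the edge set is a simplified assumption about which transitions a booking flow might allow.

```kotlin
// Each step of the workflow; names loosely follow the nodes in the showcase below.
enum class Step { ASK_USER, EXECUTE_TOOL, SEND_RESULT, FINAL_CONFIRMATION, DONE }

// The "playbook": for every step, the only steps that may follow it (simplified assumption).
val edges: Map<Step, Set<Step>> = mapOf(
    Step.ASK_USER to setOf(Step.EXECUTE_TOOL, Step.FINAL_CONFIRMATION),
    Step.EXECUTE_TOOL to setOf(Step.SEND_RESULT),
    Step.SEND_RESULT to setOf(Step.ASK_USER),
    Step.FINAL_CONFIRMATION to setOf(Step.FINAL_CONFIRMATION, Step.DONE),
    Step.DONE to emptySet(),
)

fun canMove(from: Step, to: Step): Boolean = to in edges.getValue(from)

// Returns the index of the first transition that breaks the playbook, or -1 if the path is legal.
fun firstIllegalMove(path: List<Step>): Int =
    path.zipWithNext().indexOfFirst { (from, to) -> !canMove(from, to) }

fun main() {
    val legal = listOf(
        Step.ASK_USER, Step.EXECUTE_TOOL, Step.SEND_RESULT,
        Step.ASK_USER, Step.FINAL_CONFIRMATION, Step.DONE,
    )
    val skipsConfirmation = listOf(Step.ASK_USER, Step.DONE)
    println(firstIllegalMove(legal))
    println(firstIllegalMove(skipsConfirmation))
}
```

The point of the design is that the allowed transitions live in data the developer writes, so no model output can jump from asking the user straight to a finished booking.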

3. Agent: The "Orchestrator"

The Agent is the controller that runs the Strategy. At each step, it uses the Model (the LLM) to process natural language and then calls the appropriate Tool as defined by the workflow. It's the orchestrator, not an oracle.
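A minimal sketch of "orchestrator, not oracle", again in plain Kotlin rather than Koog's own API: the model only proposes a tool call, and the agent decides whether that call may run. `Model`, `ToolCall`, `Agent`, and the `bookMoonShuttle` name are hypothetical stand-ins invented for this illustration.

```kotlin
// Hypothetical stand-in for an LLM: it only proposes a tool name and an argument.
data class ToolCall(val name: String, val argument: String)

fun interface Model {
    fun propose(userMessage: String): ToolCall
}

class Agent(
    private val model: Model,
    private val tools: Map<String, (String) -> String>,
) {
    // The agent, not the model, decides whether a proposed call is allowed to run.
    fun handle(userMessage: String): String {
        val call = model.propose(userMessage)
        val tool = tools[call.name]
            ?: return "Rejected: '${call.name}' is not a registered tool."
        return tool(call.argument)
    }
}

fun main() {
    val tools: Map<String, (String) -> String> = mapOf(
        "getSeatMap" to { flightId: String -> "Seats for $flightId: 12A, 12B" },
    )
    val wellBehaved = Model { ToolCall("getSeatMap", "TP123") }
    val hallucinating = Model { ToolCall("bookMoonShuttle", "Saturn V") }

    println(Agent(wellBehaved, tools).handle("Show me the seats"))
    println(Agent(hallucinating, tools).handle("Take me to the moon"))
}
```

Whatever the model proposes, only functions present in the registry can execute.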

Showcase: A Reliable AI Travel Agent in Action

Here’s how that Koog-powered agent would handle a user who makes mistakes, steering them back to the rules at every step.

User (by voice): "Hi, I need a flight from Lisbon to Berlin for October 35th."

Agent (Running Strategy):

Step (Validate): The agent's strategy first validates the date. It doesn't even call the LLM. It says: "I'm sorry, October 35th is not a valid date. Please provide a real date, like 'October 31st'."

User: "Oops, yeah. October 31st."

Agent (Running Strategy):

Step (Find Flights): The user input is now valid. The agent's askUserNode uses the LLM to parse the intent. The LLM output requests a tool call.

Step (Execute Tool): The executeToolNode calls the Tool: findAvailableFlights("LIS", "BER", "2025-10-31").

Tool (Your API): Returns only real flights: [{flightId: "TP123", airline: "TAP", ...}, {flightId: "FR456", airline: "Ryanair", ...}].

Agent (Running Strategy):

Step (Send Result): The sendResultNode sends this real data to the askUserNode.

Step (Present Options): The LLM now says: "I found two options: a TAP flight (TP123) at 9:00 AM and a Ryanair flight (FR456) at 9:30 AM. Which would you like?"

User: "I'll take the Lufthansa one."

Agent (Running Strategy):

Step (Guardrail): The agent's LLM prompt is grounded by the tool's output. It sees that "Lufthansa" is not in the list and replies: "I'm sorry, I only have options for TAP (TP123) and Ryanair (FR456). Which of those would you like?"

User: "Ok, ok. The TAP flight."

Agent (Running Strategy):

Step (Parse): The LLM parses flightId="TP123".

Step (Execute Tool): Calls Tool: getSeatMap("TP123").

Tool (Your API): Returns [{seatId: "12A", type: "window"}, {seatId: "12B", type: "aisle"}].

Agent (Running Strategy):

Step (Present Options): "OK, seats 12A (window) and 12B (aisle) are available. Which do you prefer?"

User: "12A."

Agent (Running Strategy):

Step (Parse): The LLM parses seatId="12A". The agent retrieves the logged-in user's passengerId="PID-98765" from the session.

Step (Confirm Protocol): The strategy moves to the finalConfirmationNode. The agent says: "Got it. I'm about to book flight TP123 to Berlin in seat 12A for passenger PID-98765. As per company protocol, I need your explicit confirmation to proceed. Please say 'Confirm' or 'I confirm' to book the ticket."

User: "Yes, go ahead."

Agent (Running Strategy):

Step (Protocol Guardrail): The agent's logic in finalConfirmationNode checks the user's text. It does not match "Confirm" or "I confirm". It replies: "I'm sorry, but for security, I must hear the specific phrase 'Confirm' or 'I confirm' to finalize the booking." This deliberately strict check is here to prove the concept: the flow moves forward only when the gatekeeper criteria are met.

User: "Ok, fine. I confirm."

Agent (Running Strategy):

Step (Execute): The protocol is met. The agent calls Tool: bookTicket("TP123", "12A", "PID-98765").

Tool (Your API): Returns {bookingId: "XYZ789", status: "Confirmed"}.

Agent (Running Strategy):

Step (End): "All set! Your booking is confirmed. Your confirmation ID is XYZ789. Have a great flight!"
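The three guardrails that fired in this dialogue (the invalid date, the airline that was never offered, and the missing confirmation phrase) can each be approximated with a few lines of deterministic Kotlin. This is a sketch under assumptions, not Koog code: the function names are invented, and the confirmation check assumes that only the last sentence of the reply has to match, which is one way to accept "Ok, fine. I confirm." while rejecting "Yes, go ahead."

```kotlin
import java.time.YearMonth

// Date guardrail: plain calendar arithmetic, no LLM involved.
fun isRealDate(year: Int, month: Int, day: Int): Boolean =
    month in 1..12 && YearMonth.of(year, month).isValidDay(day)

// Grounding guardrail: a choice is accepted only if it appears in the tool's output.
fun isOfferedAirline(choice: String, offered: List<String>): Boolean =
    offered.any { it.equals(choice, ignoreCase = true) }

// Protocol guardrail (assumption): the last sentence of the reply must be an exact phrase.
fun isExplicitConfirmation(reply: String): Boolean {
    val lastSentence = reply.split('.', '!', '?')
        .map { it.trim() }
        .lastOrNull { it.isNotEmpty() }
    return lastSentence != null && lastSentence.lowercase() in setOf("confirm", "i confirm")
}

fun main() {
    println(isRealDate(2025, 10, 35))                             // "October 35th"
    println(isRealDate(2025, 10, 31))                             // "October 31st"
    println(isOfferedAirline("Lufthansa", listOf("TAP", "Ryanair")))
    println(isExplicitConfirmation("Yes, go ahead."))
    println(isExplicitConfirmation("Ok, fine. I confirm."))
}
```

In each case the decision to continue is made by code the developer controls, and the LLM is only responsible for phrasing the reply.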

By using Koog (or any Agentic Framework), you get the best of both worlds: a flexible, natural language interface for the user, and a rigid, reliable, and "non-hallucinating" process on the backend. You've successfully put guardrails on your AI.